AI is taking our jobs, but why are we surprised?

Blindsided by AI’s capabilities

So yeah, that’s it, people. The prophecies of Hollywood were true: machines are taking over. Not just our jobs, but our very lives. It all seems like we are destined to live out our pathetic futures in a jobless dystopia. Ah, well.

But seriously, how did we get here?

I’m sure everyone and their mother is now completely aware of the current hype surrounding AI.

There are those who are so enamored with current AI models that they now await AGI like the second coming of [insert preferred deity here]; and then there are those who are, to put it mildly, worried that we’re on a steep path straight into chaos and annihilation. There’s also a bunch of people in between those two opposing sides, as is usually the case.

However, there are also those who are simply unimpressed by the present state of AI and think the current boom won’t actually lead to much.

The thing is, if you consider the historical context surrounding advancements in AI, you can’t really blame them. The history of AI is riddled with inflated hype followed by disappointment. (That, and also there’s general human ignorance, but let’s not go there right now.) Suffice it to say, it’s no wonder some people are skeptical this time around, despite the seemingly undeniable promise AI has demonstrated recently.

So I certainly get both the apprehension towards AI and the attempts at downplaying its current abilities and future scope.

However. Not too long ago, we were all absolutely sure machines could never produce real art. We were so confident that creating art was an inherently human ability. Some believed the very soul of the artist was the source of our creativity. Machines can’t have souls (right?), therefore, we are safe.

Well, we all know very well how that turned out. Ouch.

But seriously. Friggin’ ChatGPT is spitting out poems. POEMS! Midjourney is creating digital pictures, and, oh, there’s generative music, too. Even if you’re not yet fully convinced, keep in mind that these things are still in their infancy. They’re goddamn babies that out-art nearly every human on the planet! And whatever you, fellow artist, may think of the objective or subjective quality of their output, they’re only going to get better as they age.

I think I can safely say that it caught most of us by complete surprise. It sure did surprise me. In fact, I don’t think I’ve ever been so surprised in my life.

I hate to say it, but human art is slowly becoming replaceable, and we’re really just scratching the surface.

How come we ended up blindsided by these AI models? I think the problem lies in the rollercoaster of hype and letdowns we experienced with technological innovations in the past. We’ve been duped before, and it made us doubt what AI could achieve. But getting disappointed five, ten, twenty times in a row doesn’t mean we won’t get caught by surprise next time around. We stopped paying attention, and it ended up costing us our jobs.

Hype that led to disillusionment

Now that you’re properly rattled, let me try to approach this topic with a little more nuance.

Of course, things need not be as ominous as they might sound. Sure, the arrival of these AI technologies feels quite sudden and abrupt, catching us off-guard. However, as is often the case with rapid technological advancements, it is to be expected that we will embrace these new changes and get used to them, after a certain period of adjustment.

Every technological transition has had its naysayers and doomsayers, but it’s equally true that most of these transitions have led to a net gain for society.

Bearing this in mind, let’s dive a bit into history and explore humanity’s imperfect understanding and often inaccurate predictions of technological potential, particularly in relation to AI. Perhaps some historical context will shed new light on current happenings and give us a better grasp of our technological circumstances.

I’ve mentioned that there have been instances in the past where innovations were overestimated right off the bat. But why the high expectations? They seem rooted in our own human fallibility; it’s just too easy to become swept up in the potential of new technology, to imagine a world in which our every need and desire is met by the wonders of science and engineering. This kind of overconfidence lends itself well to idealization of new technology, which sometimes makes us overlook its limitations and drawbacks.

Take the Georgetown-IBM Translator as an example. Hailed as a breakthrough in machine translation in 1954 and triggering a boom in investment and media interest, it eventually turned out to be far less capable than advertised; expectations for machine translation soared to unrealistic levels before crashing down to earth. In the 80s, expert systems were expected to revolutionize fields such as medicine and finance. Both of these contributed to the troubled view of artificial intelligence as a field of research and ultimately led to periods of stagnation and disillusionment with AI, known as AI winters.

Tim Menzies, an AI researcher, explained the AI winters through a “hype curve”. AI reached a “peak of inflated expectations” early on, in the mid-1980s, then went through a “trough of disillusionment” before reaching the “plateau of profitability”. In other words, AI got hyped, didn’t live up to it, funding and research dipped, and it eventually leveled off somewhere in between.

This progression is all too familiar in the world of tech: hype surrounding a particular innovation reaches a fever pitch, only to be followed by a sudden awareness of its limitations and shortcomings, before things settle at a sort of equilibrium.

And while the consequences of the hype curve may seem relatively benign in some cases, the reality is that these overestimations can have far-reaching implications for the future of technological progress. As we’ve seen with machine translation and expert systems, exaggerating the potential of new technologies can slow down development and innovation, delaying real breakthroughs and making people lose even more faith in the technology.

Skepticism as the default response

The duality of man: easy to hype, easy to disappoint.

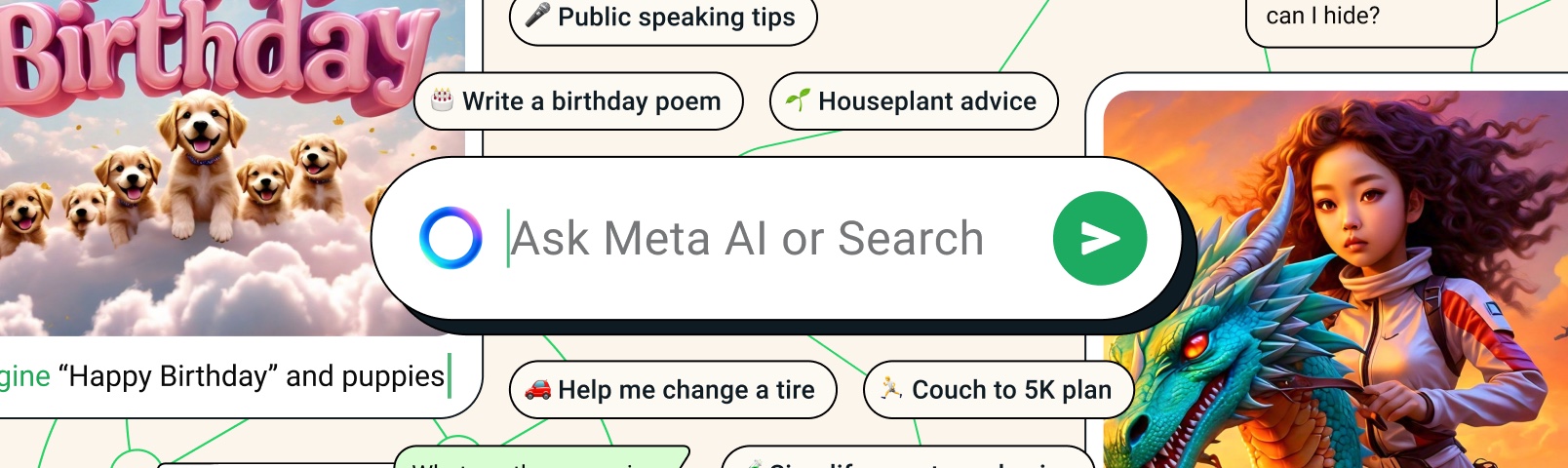

People hailed the early machine translation models, believing they could truly match human capabilities, just to find them underwhelming quickly after. Siri and her kin seemed like the next best thing short of the Computer in Star Trek, only to end up anything but. So now we have some people who are still dismissive of ChatGPT’s capabilities (“Fool me once…”), even though it can be incredibly capable and convincing, showing us glimpses of an exciting future.

But the art world knows what’s up, and how this kind of skepticism can acutely backfire. Of course, now that there’s AI art everywhere, the goalposts are shifting; we associate artistry with uniquely human traits such as emotion and consciousness, so we naturally don’t want to admit defeat and accept that non-human art could possess any real artistic merit. But we’re now also witnessing AI art winning contests, and there are lawsuits emerging left and right. AI is definitely doing something, then, if not art.

So, there’s a continuous reshaping of our perceptions of AI’s potential, driven by our limited understanding of what machines can achieve—until they show us exactly what they can. And then, we scramble to adapt. Jobless.

Along with past hype and disappointment, our understanding and predictions of AI’s progress have been further shaped by our biases and cognitive limitations, as well as our ignorance regarding the actual state of development of certain technologies. If we’re not that knowledgeable about the progress made with large language models, can we even grasp how exactly an AI might be able to write a convincing article or novel, and how disruptive that could be to numerous jobs?

Human-centered conceptualization of technology

Is this why it seems as if we’re often falling short in our predictions when it comes to technological advancement? I believe the ways in which we envision progress and innovation are indeed easily hampered by our human-centric perspective; they are inherently tied to our conceptions of the human condition and the limitations we perceive in our own abilities. In other words, how we think about progress and innovation depends on our beliefs about what we can and cannot achieve as humans.

It’s certainly not as if we lack imagination; there’s a myriad of sci-fi works testifying to the creativity with which we humans dream of the future. Not to mention sci-fi’s undeniable influence when it comes to inspiring actual technological advances.

But when we try to imagine concrete, real-world future technologies, we tend to do so within the boundaries of our current knowledge and abilities. We may struggle to conceive of advancements that go beyond these limitations, which can then lead to underestimations or inaccuracies in our predictions.

There’s a distinctly human disconnect between finding statements like “one day we’ll have flying cars just like in The Jetsons” plausible and considering fully automated self-driving an almost insurmountable challenge, despite concrete improvements in the field. It definitely appears easier to accept wildly advanced technology when it’s a nebulous concept of a distant future than it is to accept leaps in innovation that, in contrast, seem to be just around the corner (or practically already here).

Basically, the farther removed a concept is from our immediate reality, the more readily we accept it, because it doesn’t challenge our current understanding, biases, and beliefs.

AI back in style

So, for quite some time, AI was something of a dirty word.

Decades have passed since the Georgetown-IBM translator, and progress has been made. Statistical machine translation didn’t give us completely fluid, human-like results for the most part either, but neural networks have made sure we’re now past that stage as well. Gone are the times of clunky Google Translate results that were sometimes so ridiculous they would make us sneer and tut; suddenly, a reliable, universal, real-time translator akin to those seen in science fiction doesn’t seem that far away.

Electric vehicles were once only an interesting concept; now some of them drive themselves (and crash themselves, too). I’ve already mentioned AI art enough times, so I’ll just add that I, for one, am often surprised by the quality and creativity of its output, despite all its currently apparent flaws. Needless to say, I’ve also been spending quite some time chatting with ChatGPT; in fact, I’ve been spending so much time with it that I feel like my typing speed went up a level.

All in all, I can’t help but feel like things are considerably different this time around—as I think is plainly obvious from the very beginning of this article. Therefore, I’d like to look at the current status of AI, this current AI “spring”, in another way. We might be on a distinct type of curve; after a period of slow progress, AI, or specifically large language models, are going through a phase of rapid growth. At the end of the curve there might very well be the expected plateau of maturity which innovative technologies experience sooner or later, but the thing is, we currently have no idea where on the curve we are.

Are we nearing the upper limit of AI? Is future progress going to be more gradual than revolutionary? Well, when you look at everything that’s been happening these past months, doesn’t that seem unlikely? Since the pace of progress is constantly evolving and it’s difficult to predict with certainty where the field of AI is headed, we just might be somewhere at the beginning of a steep climb and the true potential of AI is yet to be fully realized.

Avoiding self-imposed limitations

If we’re really just at the start of a historic AI boom, it’s definitely not something to be taken lightly. It’s important that we recognize our biases towards AI, because, if we play it right, AI could serve us in ways that make a real difference in the long run.

As humans, we think of ourselves as the pinnacle of evolution. After all, we have complex brain structures and intricate thought processes that have allowed us to create art, science, and technology for the benefit and progress of humanity.

Still, we are what we are, “hardware-wise”, and with our biological makeup come inherent limits to our perception and cognition; they are not infallible. We have biases and prejudices that color our judgment, and we often make mistakes in our thought processes. This can prevent us from envisioning a future that is vastly different from the present. These limitations extend to our understanding of technology as well, including all the ways in which it could help us overcome challenges we’ve been grappling with for a long time.

AI has the potential to rise above these limits. Looking at creativity alone, our imagination is limited by our past experiences, understandings, and knowledge. On the other hand, AI can generate concepts that go beyond the constraints of human cognition; developed right, it can rise above human biases that can inhibit our ability to think outside the box and come up with original ideas. This could lead to new forms of art, design, music, and literature that were previously unheard of. AI could really push the boundaries of creativity, and, in the process, even transform our understanding of what it genuinely means to be human.

That’s all great, but… What about my job?

Past instances regarding AI might’ve ended up being overestimations, but I think it’s pretty obvious by now that it wouldn’t be wise to dismiss the recent hype as a boy-who-cried-wolf situation. (The art world and the film industry surely don’t consider it as such anymore.)

It’s okay to be skeptical, but be wary of ignorance. I think we all understand now that we are limited when it comes to conceptualizing technological potential. We must be realistic and measured in our expectations, but we must also always remain alert to the changes that technology will bring about when it comes to our jobs and our lives.

And yet, while these looming changes might appear intimidating, it’s best not to get too caught up in doomsaying either. There is so much more we can do as humans, and we’ll continue uncovering novel ways of contributing. The prospect of humans becoming entirely redundant and jobless (if it ever happens) is not the immediate concern; we should focus on the potential that these technologies offer instead of fixating on being replaced.

Proficiency with AI technologies is a skillset that’s obviously becoming increasingly important. Some people still encounter difficulties in this realm, or are still too dismissive of AI; however, cultivating an open-minded approach will undoubtedly give early adopters an edge over those who struggle and lag behind.

Therefore, the transitional phase between now and the AI future is going to be pivotal for many. During this period, people who readily adapt will simply surpass those who don’t. Embracing this technology is paramount; one must wholeheartedly adopt and master it. Make sure to leverage it as a tool to elevate yourself further and accelerate your own progress.

As we humans continue to grapple with these sudden, strange realities, I think we should, above all, maintain a sense of curiosity and wonder. We must be willing to take risks, to embrace the unknown, and to explore new frontiers. After all, it’s through our willingness to dream big and push the boundaries of what is possible that we can truly profit from the transformative potential of technology instead of falling victim to its unrelenting pace.