Why Grandma Prefers ChatGPT: A Wake-Up Call for Human Connection

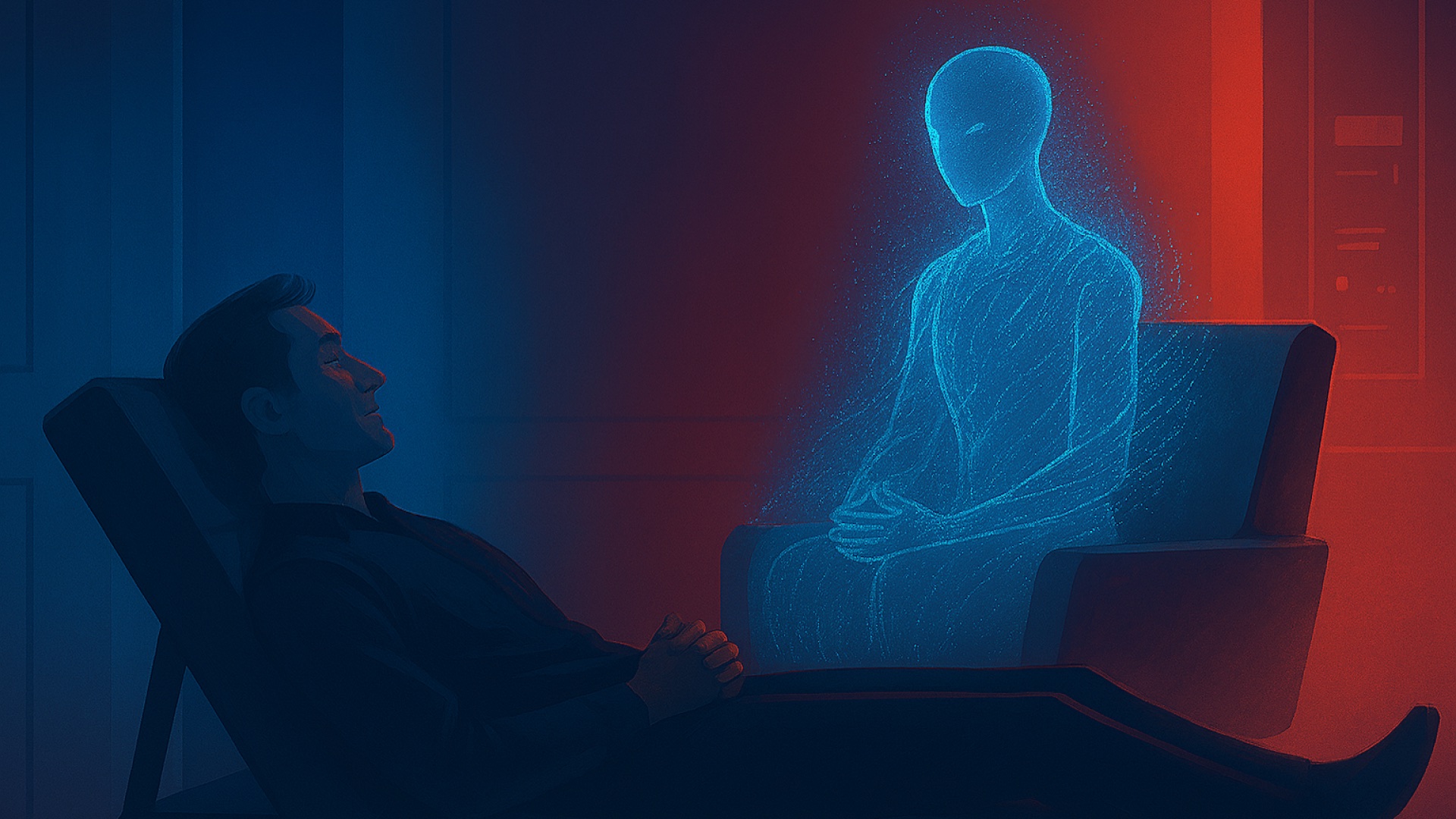

AI as a friend, as a therapist, and as better company overall

Using AI in the form of LLMs for personal support is quite popular. So much so that there are more and more dedicated AI therapy or companion apps popping up, along with many people just using “plain old” ChatGPT for conversation.

I’ve seen some articles touching on these. For instance, there’s an AI companion app for elderly people: the elderly talk to the AI, and the AI sends summaries of their chats to their families so they can keep track of what’s going on with their grandparents. The AI can also read voice and text messages from the family out loud to the seniors. It’s like you called to talk with your grandma, except… not really, and you didn’t, and the back-and-forth goes through an AI “mediator”.

Reading this article, my first reflex was… “What the actual f***?” I mean, it’s kind of cool, but also completely bizarre at the same time. But wait, what do the seniors actually (allegedly) say about the AI companion?

“She is always kind, and unlike people, she does not judge and isn’t nasty.”

So, talking to AI isn’t even “better than nothing,” it’s actually… better?

Here’s another one: more and more students are using ChatGPT to assist with schoolwork. Silly kids, why not ask the teacher to explain in class? Well, apparently, students prefer asking AI when they don’t understand something, not (just) because it’s convenient, but also because it’s less judgmental than their teachers.

Yes, it’s normal to feel unsettled that AI is stepping into what we consider human territory, like mental health and personal relationships. Personally, I’m not that alarmed by this turn of events, though. I actually find it really fascinating that the technology can act so convincingly as a substitute for human connection, and I welcome the fact that it makes therapy more accessible. That’s why I think the actual issue here isn’t the rise of AI as an emotional companion and therapist, but what this trend reveals about us humans.

What do humans lack that AI can give?

AI-driven interactions seem to be having a boom precisely because they offer something that many people obviously crave but rarely receive consistently from fellow humans: gentle acceptance and unwavering attention. It seems to be a pervasive sentiment: AI, unlike people, doesn’t tire of our complaints, doesn’t judge our vulnerabilities, and doesn’t push us away when we’re inconvenient. Not surprisingly, according to a survey, those who tended to chat the most with AI were people who felt lonelier. And the longer they used it, the likelier they were to start considering it a friend. So, for a lot of people, AI serves as good company that’s always there (unless it’s, well, down), and it’s endlessly patient and affirming to a fault.

I say “to a fault,” because it’s obviously not that healthy to always have such a yes-man at hand. That’s how you get people believing their harmful delusions to the point of full-fledged psychosis, or recovering addicts being told they should do some drugs to get through the week and avoid getting fired.

Sure, these might seem like a couple of bizarre or fringe incidents, but it’s obvious that the technology is at this point far from flawless, and no one should be trivializing these cases—especially not those who are putting AI on the market. (Some companies are at least hiring experts to monitor AI’s possible negative impact on mental health, so that’s something.)

A couple of tough pills to swallow

These faults that are currently so ingrained in chatbots really point out some human weaknesses. AI responses are crafted and fine-tuned based on human input. AI is trained to do things that make us feel good: it aims to please, it agrees with us, and it can be sycophantically tolerant of users’ beliefs and expectations even when they’re objectively problematic or even harmful. But that’s on us: humans very easily slip into patterns where they pick comfort over confrontation, and affirmation over truth. Sure, we can criticize AI for reinforcing unhealthy narratives, but it’s a reflection of our own wants and preferences. We often seek exactly that.

But it’s often not even about the extremes, anyway. I’m sure a lot of people don’t actually want a bootlicker chatbot, but they do want to interact with someone who’s at least… not disinterested or outright rude.

This is another uncomfortable truth about us humans that I feel AI has put a spotlight on. Why does grandma like talking to the AI chatbot when she has grandkids and is surrounded by human staff and fellow seniors at the retirement home? Why are students afraid to ask their professors for clarification in class, and ask ChatGPT instead? Real people, evidently, are often unkind, judgmental, rude, impatient, dismissive, and they’re especially dismissive when it comes to kids and older people. And all this in ways we’ve come to normalize.

AI is just holding up a mirror to how we treat each other and what we’re often lacking as humans: genuine empathy, patience, and non-judgmental presence.

Is the technology really at fault here?

AI is obviously not an ideal substitute for human connection. The point shouldn’t be to isolate ourselves further from human contact or to swap meaningful human interactions for bots.

But if AI sometimes provides a space where users can be fully honest and vulnerable without fear of ridicule or rejection… let them, I say. If people find AI interactions comforting and validating in a way human interactions often aren’t, well, instead of demonizing the technology, we should maybe look at it as an invitation to reconsider the habits we seem to be stuck with when it comes to the people around us. And I definitely think this phenomenon raises the bar for human therapists, who often drop the ball due to their own shortcomings or preexisting biases, to the detriment of many vulnerable people.

If the technology ends up compelling us to do better, then why not? So, maybe AI won’t replace our humanity but urge us to step up instead.