AI will be your new Wikipedia (and learning might suck just a little bit less)

Answers to questions, the modern way

Online platforms have profoundly changed the way we seek and consume knowledge, leaving traditional print encyclopedias in the dust. Literally.

What do people say when you politely ask for the source of some offhand claim of theirs? They sure don’t say “Just Encyclopædia Britannica it.” Google’s search engine has become so synonymous with instant information retrieval that it’s a full-fledged verb in many languages. A whole neologism for “type a query on this proprietary platform and, in the blink of an eye, be presented with thousands of hits.”

With a vast array of information readily accessible at our fingertips, it comes as no surprise that print encyclopedias have experienced such a decline in popularity and relevance. Gone are the days of flipping through heavy volumes and struggling to find specific information; people can now instantly access regularly updated content on an extensive range of topics with a simple online search.

And what’s more, traditional encyclopedias with their limited publication cycles and outdated information pale in comparison to the dynamic, real-time knowledge available online. With the click of a button or a tap of the finger, users can dive headfirst into the latest articles, groundbreaking research papers, thought-provoking blog posts, and a wealth of other sources, so it’s easy to stay up to date with the most recent developments in their fields of interest. No dusty old tomes required.

Another potential shift on the horizon

Shifting from print to online encyclopedias and other sources was inevitable, really. It’s hard to beat a collaborative knowledge repository like Wikipedia when it enables anyone with an internet connection to read up on the collective, curated expertise of others. It’s certainly a textbook example of technology doing good.

But who’s to say there couldn’t be another potential shift in the way we access knowledge? Perhaps due to some currently very relevant technology with obviously vast potential?

I’m sure you already know exactly what I’m referring to. These are indeed some quite turbulent times, as generative AI models such as LLMs and AI art systems have shown promise for various use cases that could disrupt many established workflows. Content creation, customer support, creative writing, art and design, you name it: they will all feel the effects sooner or later, if they aren’t already.

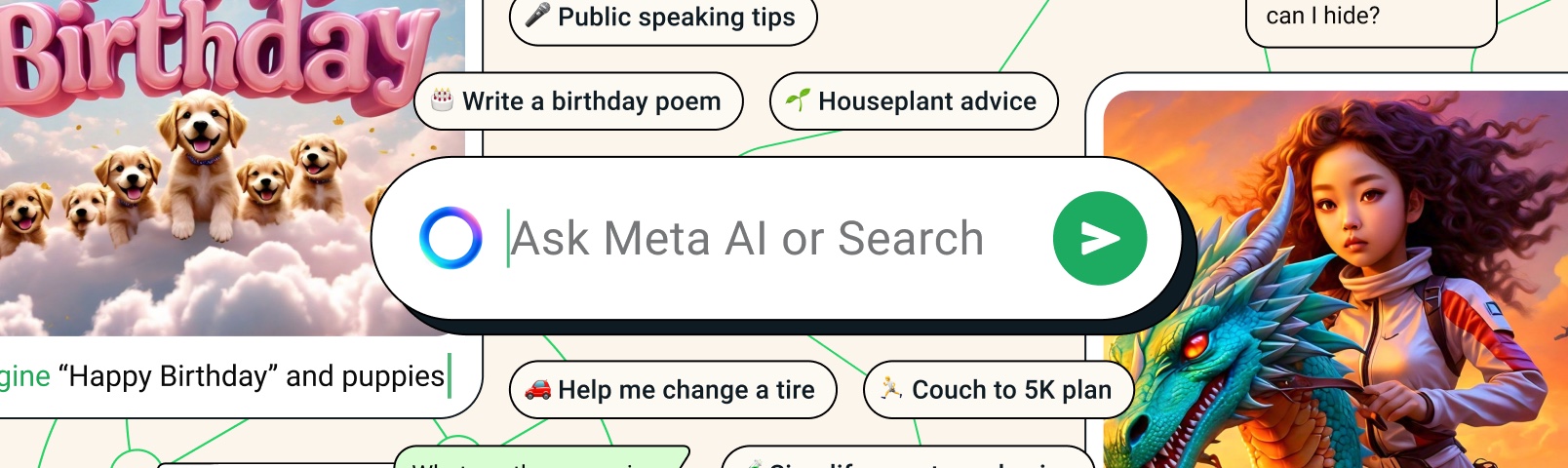

It’s safe to say that AI has pretty much sealed the fate of many industries in the years to come. And considering that, according to this poll by The Verge, the main use of AI tools is “answering questions”, I’m quite convinced that the same could happen to various media, including online encyclopedias.

Reversing the instructor-learner dynamic between humans and AI

Currently, LLMs like ChatGPT are absorbing knowledge from diverse sources that include books, articles, and pages like Wikipedia. These serve as a vast corpus of information that helps the AI models understand language, context, and various domains of knowledge. AI is diligently learning from us now, but it’s only a matter of time before this dynamic reverses and we start using AI to inform and educate us instead.

As it stands, language models like ChatGPT can already provide a wealth of information. With time, they might actually become capable of curating knowledge (mostly) on their own, with certain caveats. Couple this with the ease and convenience of getting information on all kinds of topics from one seemingly omniscient source, and it doesn’t seem like that big of a leap to imagine a future where an AI service acts as a central communication, information, and education hub. What this means is that such a hub could render platforms like Wikipedia redundant to the general public.

Easier said than done, though. One of the fundamental challenges of replicating structured, curated knowledge solely through AI models is maintaining credibility. Wikipedia has thrived thanks to the contributions and reviews of its large community, but its approach is not exactly foolproof. Fact-checking and minimizing bias, challenges Wikipedia already struggles with, become even more pronounced when relying solely on AI.

Of course, relying solely on AI isn’t a necessity. While AI models could curate content to some extent, humans could absolutely still play a significant role in ensuring accuracy, reducing bias, and addressing complex nuances. Striking the right balance between user contributions and centralized editorial control would go a long way toward ensuring credibility.

Promising developments

For an AI to do the curating, or to create an encyclopedia itself, the model taking on the task would need a wide range of skills, such as reasoning and perception, as well as commonsense knowledge and social intelligence. So for quality self-curation, we would probably have to wait until an AI model demonstrates these skills reliably and consistently.

In the meantime, hybrid models that combine AI with traditional resources could enhance the usability of existing online encyclopedias and similar platforms by providing a natural language interface or generating summaries of complex topics. Some tasks seem very doable even now: for example, providing on-the-go translations of Wikipedia content that hasn’t been translated yet (that is, content without a dedicated page in the target language), or consolidating information from Wikipedia pages in different languages for an even more comprehensive take on certain topics.

If this still seems like a reach given the various idiosyncrasies and blunders ChatGPT sometimes exhibits at present, some current developments hint at improvements in the relevant areas even in the short term. Prompt-engineering approaches like ReAct can enhance the capabilities of LLMs by interleaving reasoning steps with actions, such as looking something up. In practice, this means that the hallucination and error propagation prevalent in chain-of-thought reasoning can be overcome by something as simple as letting the model interact with a Wikipedia API.
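To make the “interleaving reasoning and acting” idea a bit more concrete, here is a minimal sketch of a ReAct-style loop, loosely modeled on the Thought / Action / Observation format. It assumes a hypothetical `llm` callable (replaced here by a scripted fake, `scripted_llm`, so it runs offline) and a tiny hard-coded dictionary, `TOY_WIKI`, standing in for a real Wikipedia API. None of these names come from an actual library; the point is only the control flow: the model reasons, picks an action, and the result of that action is fed back in before it reasons again.

```python
import re
from typing import Callable, Optional

# Hypothetical stand-in for a Wikipedia API: a tiny in-memory dictionary.
TOY_WIKI = {
    "Napoleon": "Napoleon Bonaparte was a French military leader "
                "who became Emperor of the French in 1804.",
}

# Matches the action line the model is asked to emit, e.g. "Action: Search[Napoleon]".
ACTION_RE = re.compile(r"^Action: (Search|Finish)\[(.*)\]$", re.MULTILINE)


def search(entity: str) -> str:
    """Look up an entity in the toy wiki (the 'acting' half of ReAct)."""
    return TOY_WIKI.get(entity, f"Could not find [{entity}].")


def react_loop(llm: Callable[[str], str], question: str, max_steps: int = 5) -> Optional[str]:
    """Alternate model reasoning (Thought + Action) with tool results (Observation)."""
    transcript = f"Question: {question}\n"
    for step in range(1, max_steps + 1):
        output = llm(transcript)  # the model reasons over everything so far
        transcript += output + "\n"
        match = ACTION_RE.search(output)
        if match is None:
            transcript += f"Observation {step}: Invalid action.\n"
            continue
        action, argument = match.groups()
        if action == "Finish":
            return argument  # the model commits to an answer
        # Feed the retrieved text back in so the next Thought is grounded in it.
        transcript += f"Observation {step}: {search(argument)}\n"
    return None  # no answer within max_steps


def scripted_llm(transcript: str) -> str:
    """Hypothetical fake LLM: searches first, then answers from the observation."""
    if "Observation" not in transcript:
        return "Thought: I should look up Napoleon.\nAction: Search[Napoleon]"
    return "Thought: The article says he became Emperor in 1804.\nAction: Finish[1804]"


if __name__ == "__main__":
    print(react_loop(scripted_llm, "In what year did Napoleon become Emperor of the French?"))
```

Run as a script, this prints `1804`. The design choice worth noticing is that the answer comes from the retrieved text rather than from the model’s memory; in a real setup, `scripted_llm` would be an actual model call and `search` a request to a real encyclopedia API.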

Building on these promising advances could make an all-encompassing, AI-powered central hub like the one described earlier a viable prospect for the future.

AI tutors for immersive education

To further illustrate the potential such a service would have over online encyclopedias in their current form: AI models like LLMs, or perhaps even specialized AI education hubs of the future, could revolutionize education. While they can’t replace social interaction (or, at least, it’d be nice if they didn’t), they could provide some of the benefits of one-on-one tutoring and active learning. Having an AI-created avatar read out an AI-generated lecture is already plausible, and AI video generators could be used to turn any educational topic into a documentary, which could greatly aid information retention. Not to mention VR and the possibilities it opens up for spicing up various educational topics.

Students could have conversations with historical figures. Why leaf through a bunch of text to learn something about Napoleon when you could just ask him yourself? There’s no denying that experiencing a historical event as if you were there is way more engaging than some textbook paragraph devoid of any emotion or intrigue. This variety of audiovisual tools could also be of great use to neurodivergent students and students with disabilities. It could help with language learning, too: speech synthesis or video avatars could be used for real-time conversation practice.

Another advantage of such AI models would be their ability to provide personalized pacing in education. In a traditional classroom setting, there will more often than not be at least some students who are bored because the material is too easy for them, as well as some who are overwhelmed because it’s too advanced. An adaptive learning approach powered by an AI model that dynamically adjusts the difficulty of the content based on each student’s proficiency could help keep every student appropriately challenged, preventing boredom or frustration and fostering continuous progress.
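The core of “dynamically adjusting difficulty” can be illustrated with a very simple rule borrowed from the “staircase” procedures used in psychophysics: two correct answers in a row make the next item harder, a single miss makes it easier. The sketch below assumes this rule, a difficulty scale from 1 to 10, and the hypothetical class name `Staircase`; it is not how any particular AI tutor works, and a real system would presumably estimate proficiency in a far richer way.

```python
from dataclasses import dataclass


@dataclass
class Staircase:
    """Hypothetical adaptive-difficulty tracker: two correct answers in a row
    make the next item harder, a single miss makes it easier."""
    level: int = 1       # current difficulty level
    min_level: int = 1
    max_level: int = 10
    streak: int = 0      # consecutive correct answers since the last level change

    def record(self, correct: bool) -> int:
        """Update the difficulty after one answer and return the new level."""
        if correct:
            self.streak += 1
            if self.streak == 2:
                self.level = min(self.level + 1, self.max_level)
                self.streak = 0
        else:
            self.level = max(self.level - 1, self.min_level)
            self.streak = 0
        return self.level


if __name__ == "__main__":
    tracker = Staircase()
    for answer in [True, True, True, True, False, True]:
        tracker.record(answer)
    print(tracker.level)
```

With the answer sequence above, the level climbs from 1 to 3, drops back to 2 after the miss, and ends at 2. Because it takes two successes to move up but only one failure to move down, this particular rule tends to settle around the difficulty at which a learner answers roughly 70% of items correctly, which is one simple way of formalizing “appropriately challenged”.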

The Socratic Method against the Dunning-Kruger Effect

Maintaining a level of challenge when presenting information to learners is, I think, quite important. And being almost mystifyingly challenging at times is something that online encyclopedias definitely have going for them, I’ll give them that; I’m sure it’s quite the universal experience to come across a Wikipedia article where you have to open 10 more tabs to get your bearings on the topic before you’ve even finished the intro.

Chatting with LLMs is, currently, a different experience. When AI models serve up information in an overly simplified format that isn’t as challenging as it could (or should) be, there’s a risk of people developing only a shallow understanding of topics. And an inflated sense of comprehension without a real grasp of the intricacies doesn’t exactly foster intellectual humility.

This might inadvertently lead to more instances of the Dunning-Kruger effect: a cognitive bias where people with limited knowledge or understanding of a subject overestimate their competence in it. In other words, people may develop a false sense of comprehension of complex topics due to reduced exposure to “unknown unknowns”, the aspects of a topic they don’t know they don’t know. Those 10 tabs you opened while trying to comprehend the first paragraph of that Wiki article? Those were all unknown unknowns. But now that you’ve come across them, they’ve become your “known unknowns”, and you at least have an idea of just how little you know about the topic.

Skip that whole step and now you might think you’re properly informed because you understood a watered-down summary. And people are already less likely to engage deeply with information and more likely to skim or jump from one piece of information to another. All of this to say that we’d probably get a lot more people who confidently speak about topics they only have a surface-level understanding of.

To address this, the Socratic method can be employed, at least in formal educational contexts. It involves a dialogue in which one person poses a series of probing, thought-provoking questions to another. The idea is that engaging in such a dialogue with an AI model would encourage productive struggle, prompting users to delve deeper into their understanding and uncover hidden complexities, which leads to more effective learning. And this is actually exactly what Khan Academy is doing with Khanmigo, its personalized AI tutor chatbot.

Changes in knowledge acquisition and the nature of knowing

The digital age has certainly brought about an epistemological shift, changing how knowledge is acquired and accessed. Knowledge is increasingly moving from being stored solely in the mind to being readily available through external devices; in other words, storing and recalling information in our heads is being augmented by the ability to find and retrieve it using external tools such as online resources and AI models.

This shift towards externalizing knowledge raises questions about the nature of knowing and its impact on knowledge retention. Sure, there’s “digital amnesia”, the tendency to forget information we trust a device to remember for us, which may affect memory and information storage. However, with information generally just a few clicks away, the emphasis might shift from memorization to the ability to locate and use information effectively.

Consequently, the nature of knowledge itself undergoes a transformation, becoming more dynamic and adaptable. Not to mention that there is some empirical indication that offloading information to external devices might actually benefit creativity and productivity, freeing people to focus on higher-order thinking and problem solving.

Do you really need to memorize a bunch of technical details if you have your trusty AI hub at your fingertips (or in the corner of the HUD of your fancy future smart glasses)?

Higher-level thinking in lieu of cognitive loss

Then again, there’s also the risk of becoming overly dependent on digital tools if we increasingly rely on them for information retrieval. Losing access to them, temporarily or permanently, might make for some non-ideal situations at work or in life in general.

There’s another thing, as well: does relying on external tools for information storage and retrieval potentially impact our cognitive skills? In some cases, yes; for instance, research has suggested that the use of GPS navigation systems can impact our spatial memory and navigation skills, leading to spatial cognitive deskilling. The implication seems to be that, similarly, if we’re always relying on search engines to find information, our memory recall and critical thinking skills might not be exercised as much, leading to some form of cognitive loss.

But then, did the rise of calculators make everyone worse at math? Well, research says that it’s better for kids aged 5–10 to use calculators in a more limited capacity, but older kids should actually have frequent access to them. This is because, contrary to common misconceptions, calculator use does not hinder students’ skills in arithmetic—it can actually boost their calculation skills and capacity for higher-level problem solving and exploration. And you can’t deny that calculators and computers have played a crucial role in driving technological and scientific advances. They have expanded our computational capabilities and opened doors to new possibilities in various disciplines.

Technology marches on

While it’s important to acknowledge the possible downsides of all the phenomena surrounding these changes in knowledge acquisition, one thing that seems unstoppable is the pace of technological innovation. The question isn’t whether it will become a reality, but how it will be utilized, and how we will adapt to it.

An AI-powered knowledge hub could potentially provide a centralized source of communication, information, and education, and the benefits of personalized learning and interactive educational tools can’t be dismissed or downplayed in any reasonable way. These advancements could revolutionize the way we acquire knowledge and provide enhanced accessibility for a wider range of people, including neurodivergent students and people with disabilities, and this is an opportunity that shouldn’t be passed over.

The notion that such a knowledge hub would render online encyclopedias redundant to the general public is a complex proposition. But I do think it’s a very real possibility, given the incremental nature of progress in artificial intelligence, much of which has already been highly promising. Of course, in this rapidly evolving landscape, striking the right balance between AI-driven advancements and human involvement will be essential to ensure the credibility, accuracy, and accessibility of knowledge for the benefit of society as a whole.