Using synthetic users for UX research (because who needs humans, anyway… right?)

Sampling synthetic users

AI has certainly shown a lot of promise when it comes to future opportunities for UX designers. While there’s still no satisfactory dedicated AI tool for designing user interfaces, there’s a whole slew of tools that can help with various aspects of the design process—some successfully, some not so much.

But there are some curious developments going on in UX research, as well. I’ve noticed a number of advertisements and articles popping up about AI-powered UX research tools. For instance, there’s Synthetic Users, which, as might be obvious from the name, provides you with AI “users” that you can conduct your research on. Apparently, this “saves you from headaches” you might get by dealing with actual people.

As if AI can’t give you headaches! Still, it might sound strange that a UX researcher would want to avoid real humans, even if it’s true that people can be tough to handle sometimes. Most of the criticism of these AI tools centers on their core premise being wildly incompatible with some core tenets of UX: if the goal of UX research is to make sure we’re creating products that meet the needs and desires of human users, why use AI instead of humans? It’s perfectly legitimate to ask: how is that user-centric?

Of course, that’s not to say AI doesn’t have good uses in UX research, and a good dose of future potential when the technology matures some more. There are already some good applications for it, and as the technology progresses, we’ll surely have even more ways to incorporate it to our benefit.

As it stands, though, using synthetic users to gauge how actual human beings will respond to your product strikes most people as a very curious choice. But, staunch skepticism aside, could there be good reasons to substitute AI for humans in testing?

How these research tools work

Before we get into it, I believe I should explain exactly which use cases of AI in UX research I’m talking about. For a brief overview of how these AI tools work, Nielsen Norman Group (NN/g) is a solid source.

As they mention in their article, we mainly have AI insight generators and AI collaborators. AI insight generators can be used to summarize the transcripts of user-research sessions. However, they can’t take into account any background info about the product or the users. AI collaborators, on the other hand, are like a more sophisticated version of insight generators, because they can actually accept some contextual information.

Of course, usability testing sessions can’t be properly analyzed from the transcript alone. A great deal of usability testing is seeing and hearing for yourself what the user is doing and saying while using your product. Obviously, a lot of this information is lost if only the transcript is taken into account.

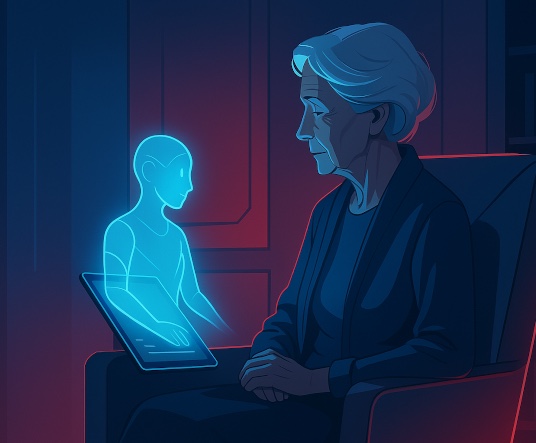

And then we also have silicon sampling, of which the aforementioned Synthetic Users is an example. It’s really as simple as substituting an LLM such as ChatGPT for human respondents.
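To make the idea a bit more concrete, here is a minimal Python sketch of what silicon sampling could look like under the hood. It is not how Synthetic Users or any particular product works; the function names, the persona fields, and the `ask_model` callable are all assumptions made up for illustration. The model call is deliberately left as a plug-in function, with a canned stand-in, so the sketch runs without any LLM service.

```python
from typing import Callable


def build_persona_prompt(persona: dict[str, str], question: str) -> str:
    """Combine a persona description and a research question into one prompt."""
    traits = "\n".join(f"- {key}: {value}" for key, value in persona.items())
    return (
        "Answer the interview question as the person described below.\n"
        f"{traits}\n\n"
        f"Question: {question}"
    )


def sample_synthetic_answers(
    personas: list[dict[str, str]],
    question: str,
    ask_model: Callable[[str], str],
) -> list[str]:
    """Ask the same question once per persona and collect the answers.

    `ask_model` is a hypothetical hook: any function that takes a prompt
    string and returns the model's reply as a string.
    """
    return [ask_model(build_persona_prompt(p, question)) for p in personas]


def canned_model(prompt: str) -> str:
    """Stand-in for a real LLM call, so the example runs offline."""
    return f"(simulated answer to a {len(prompt)}-character prompt)"


if __name__ == "__main__":
    personas = [
        {"role": "nurse", "age": "34", "tech comfort": "medium"},
        {"role": "student", "age": "19", "tech comfort": "high"},
    ]
    for answer in sample_synthetic_answers(
        personas, "How do you usually book appointments?", canned_model
    ):
        print(answer)
```

Everything that makes the "respondent" feel like a person lives in the persona text and in the model itself, which is exactly why the quality of both matters so much.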

There’s obvious appeal to these tools for some specific parts of the research process when it comes to efficiency and streamlining the work. However, while all these tools are promising, and some are even already producing decent results, they’re also still very much in their infancy—and there, quite expectedly, lies the problem.

“Hey, at least it’s better than nothing”?

So, considering the stage of development that they’re currently in, can we really rely on these tools as substitutes for actual humans—be it on the researcher or the tester side?

Well, that might not be the best course of action. For now, these tools are a poor choice if we want to keep inherent biases and sub-par data from muddying the findings of UX research.

That doesn’t seem to stop people from trying to capitalize on the idea, though. Obviously, the biggest reason these tools are interesting is their ease and practicality. They’re also being touted as “better than nothing” if you didn’t really plan on having real users test out aspects of your product. And, unfortunately, UX research is an area where funding is very often lacking; no wonder, then, that synthetic data might be championed as a remedy for insufficient or non-existent research in projects that apparently can’t afford to conduct it.

Something could be said about companies that would rather not spend a lot of money on researching their users, and that would use synthetic data only because otherwise they’d have none at all. Maybe these are currently the actual main clients for these tools. (Just as a light-hearted aside, I’d like to mention this entertaining interaction, where an LLM was asked about using AI for artificial UX research. It pretty much outright said that AI tools for replacing human research aren’t meant for professional UX researchers, but for those who want to go the faster and cheaper route at the expense of quality. Straight from the horse’s mouth…)

So, is that the end of the story? AI personas and synthetic data have no place in UX research, and anyone who uses them is bad and should be ashamed? Well…

Simulating humans for their (actual) gain

Now, I don’t want to seem like the devil’s advocate for just about every controversial implementation of new technologies, but I’d still like to mention some thoughts I have about this.

The way that technology has progressed throughout the years means that we already have plenty of simulations and tests that successfully do without real humans. This is often due to very obvious moral reasons, like using crash test dummies when testing car safety, but in some cases it’s more because of cost and practicality. For a rather simple example of this, think of material durability testing; for fabrics, instead of having people wear garments under various conditions to test durability and longevity, which would be far too slow and expensive, wear and tear is simply simulated through mechanical means.

Don’t get me wrong, I’m not saying users are as simple as a pair of pants and that their behavior is easy to simulate. But we’re starting to have more and more data about people in general, which makes the task easier.

Amassing vast amounts of user data has its own problems that I won’t be going into now, because it’s a rabbit hole that I couldn’t see myself getting out of easily. I’d just like to mention that simulations based on this kind of data can actually have genuinely positive uses. Group behavior is something that’s been the target of simulations that are then used for testing measures for crowd control, for instance. And training the computational models on real human data means that the simulations end up reaching a level of accuracy that’s actually useful.

So, I wouldn’t really be surprised if actual user personas could be replicated one day with high accuracy. Some of the UX tools already available tout 95% overlap with actual human feedback. Whether that’s really true or not at the moment, it’s going to be decidedly true at some point in the near future. And if one can get practically the same results faster and cheaper, why would one bother people with these tasks, then?
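It’s worth pausing on what a figure like “95% overlap” could even mean, since the number depends entirely on how overlap is defined. The Python sketch below shows one possible reading, which is my assumption and not any vendor’s published method: the share of themes raised by human participants that the synthetic feedback also surfaced. The function name and the example themes are invented for illustration.

```python
def theme_overlap(human_themes: set[str], synthetic_themes: set[str]) -> float:
    """Share of human-reported themes that also appear in the synthetic feedback.

    This is one assumed definition of "overlap". It ignores themes that only
    the synthetic users raised, so it says nothing about invented findings.
    """
    if not human_themes:
        raise ValueError("need at least one human theme to compare against")
    return len(human_themes & synthetic_themes) / len(human_themes)


# Made-up themes, purely for illustration.
human = {"confusing checkout", "slow search", "unclear pricing", "likes dark mode"}
synthetic = {"confusing checkout", "slow search", "unclear pricing", "wants chatbot"}

print(f"{theme_overlap(human, synthetic):.0%}")  # prints "75%" (3 of 4 themes matched)
```

Note that in this toy example the synthetic side also produced a theme (“wants chatbot”) that no human mentioned. A headline overlap number computed this way would hide that completely, so it’s fair to ask any tool how its figure is calculated.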

There’s another thing that’s often mentioned as a critique of AI tools: their propensity for hallucination. This is a real problem that needs to be ironed out of all these tools for them to be consistently useful, not only with regard to UX, but across all potential uses of AI. However, when it comes to UX research specifically, I would like to point out that humans can and do introduce noise into the data as well. People have their innate “problems” and quirks when they participate in research: they can be dishonest, or they can tick random answers without actually heeding the questions. All of this can skew results.

With sufficiently sophisticated tools one day, we could have more control over the results. We could even simulate all these human quirks if we felt like it.
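As a toy illustration of what “simulating human quirks” might look like, here is a small Python sketch that takes a list of clean survey answers and makes a chosen fraction of them careless, that is, replaced by a random pick from the answer scale. The function name, the rating scale, and the `careless_rate` parameter are all hypothetical; real respondent behavior is far messier than a single probability.

```python
import random


def add_careless_noise(
    answers: list[int],
    scale: list[int],
    careless_rate: float,
    rng: random.Random,
) -> list[int]:
    """Return a copy of `answers` where each one is, with probability
    `careless_rate`, replaced by a random value from `scale`."""
    if not 0.0 <= careless_rate <= 1.0:
        raise ValueError("careless_rate must be between 0 and 1")
    return [
        rng.choice(scale) if rng.random() < careless_rate else answer
        for answer in answers
    ]


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed so a run can be repeated
    clean = [5, 4, 4, 2, 1, 3, 5, 4]
    print(add_careless_noise(clean, scale=[1, 2, 3, 4, 5], careless_rate=0.25, rng=rng))
```

The point of a dial like `careless_rate` is the control it gives: set it to zero for idealized respondents, or turn it up to see how robust your conclusions are to inattentive ones.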

Facing reality, embracing benefits

What I’ve mentioned here is still just a vision of the future, and the tools we have now are, of course, less than ideal. However, I do think we’ll be seeing more and more of these AI tools in UX; they’ll become more ubiquitous as they become more sophisticated. And, yes, for some, that might be scary.

Of course, we can all be apprehensive of new technology, especially if it feels like it could replace us at our jobs. Still, we can’t simply dismiss tools that have replaced people by being faster and less resource-intensive.

Taking a critical approach doesn’t necessarily mean simply having an aversion to a topic, as people often do when it comes to AI, but recognizing that no advancement in technology, or in the world in general, is without its flaws. And to understand how to improve something, we need to know exactly what its flaws are and how they can be fixed.

UX as a whole is still a long way from getting replaced by AI, because it’s so complex and unpredictable. It deals with humans at its core, after all. But the technology will continue to develop, whether we like it or not; we can’t really expect things to turn around now. We can’t go against the tide of progress, because we might end up on the wrong side of history. We need to extract the best from the reality we’re faced with—and, in the meantime, put ourselves in the conversation and voice our concerns out loud. By doing so, we can help in creating future tech that we’ll genuinely benefit from, instead of it giving us headaches.