From screens to Extended Reality: XR is transforming interfaces

Steps towards more intuitive HCI

From the advent of classic desktop computers, where the click of a mouse led to something happening on the screen in front of you, to the touch-centric evolution that defined how we interacted with smartphones and tablets, we have been witnesses to the steady pursuit of a more immersive and intuitive human-computer interaction (HCI).

Desktop computers and smartphones are now ubiquitous and familiar, and we’ve come to accept them and their user interfaces as normal and generally intuitive. Nevertheless, they have inherent limitations: they don’t provide the best user experience across all possible use cases and scenarios. We tend to overlook or tolerate these shortcomings because of the overall benefits the devices offer. Still, they might not be here to last.

Touchscreens, with their convenience, flexibility, and intuitive touch interactions, have already diminished the role of physical buttons. And now, with new advances in technology, there could be a reduced need for physical input devices altogether. This time around, the driving force for change will be Extended Reality (XR) technology and the emerging emphasis on Natural User Interfaces (NUIs).

Extended Reality tech utilizing Natural User Interfaces

As we’ve all seen, Apple announced one such device, the Vision Pro mixed reality headset, at WWDC 2023. Its operating system, visionOS, offers a spatial UI that relies on eye tracking for navigation, voice dictation, and hand gestures like tapping to select and flicking to scroll. Thanks to infrared illuminators and side cameras, users can gesture even with their hands resting in their lap or casually at their sides. That frees them further from traditional input devices and ushers in a new era of Natural User Interfaces. No need for touchscreens, much less keyboards and mice. At least in theory.

This device serves as a glimpse of the not-so-distant future; our physical environment melding with the digital, and the usual ways of interacting with computers and smartphones in their current form taking a back seat, or even becoming obsolete for a wide array of use cases.

But this is merely the tip of the iceberg. As headsets like the Vision Pro point us towards a future that embraces spatial information architecture along with NUIs, it becomes evident that we are on the brink of quite the transformation in everyday human-computer interaction; one that may truly redefine the way we interact with digital information. No longer confined to flat screens and pages, digital data is now in the process of integrating more and more into the fully dimensional environment surrounding us.

Why stop at XR headsets, though? Step by step, we’re moving towards future tech that could someday resemble full-blown, hyper-realistic virtual environments, maybe even holodecks.

(For all you non-Star-Trek-watching people/heretics, the holodeck is a holographic simulation room that can create highly realistic virtual worlds with computer-generated scenarios and characters which can be interacted with as if they were real.)

Just imagine setting foot in a room within your home or a shared immersive space, where motion tracking, haptic feedback, and advanced display technologies work together to create the ultimate mixed reality experience.

Still a long way from holodecks, though

As cool as the concept of the holodeck is, it’s of course still fiction and not yet viable to replicate. Probably the most problematic part is tactile feedback and physical interaction.

In the holodeck, users can touch, feel, and interact with virtual creations as if they were real, and virtual entities can in turn have physical impacts on users. The holodeck can generate physical landscapes and structures along with different types of terrain, meaning the virtual environment is fully tangible. This is very much beyond the current capabilities of XR tech, where tactile feedback is limited and simulated through (for now) mostly cumbersome equipment that stands in the way of a natural-feeling experience. (There are some developments addressing this, though.)

Since holodecks are a step beyond VR/AR/MR technologies, they might best be described as “enhanced mixed reality” or “hyper-reality”. It’s a blend of the real and the virtual to such an extent that the boundaries between the two are virtually (pun intended) indistinguishable.

Obviously, no XR tech can currently (or potentially) provide the level of realism and immersion needed for holodecks. However, we can look to XR devices to serve as a potential intermediary which could give us an approximation of such an experience at least in some aspects, and relatively soon at that.

Navigating 3D spaces with gestures and movement

So, what would this XR experience look like in our current, starship-deprived reality? First, let’s talk about the specifics of navigating a 3D user interface when it comes to tech we have at present.

Generally speaking, in AR/VR/MR spaces, users are not confined to just clicking buttons or swiping screens; there’s a greater emphasis placed on gestures and body movement. Simple hand gestures can be used to trigger actions; for instance, a grabbing motion might be used to select an object, and a throwing motion to discard it. With the right equipment, users can use their whole bodies to interact, like turning their heads to look around, or walking through the virtual space. All these actions make for a richer gestural “vocabulary” than 2D interfaces offer.

This pronounced physicality of XR spaces opens up new possibilities for interaction design. Advanced motion tracking could extend this concept to more intricate gestures. Building on a set of distinct gestures that could correspond to different actions in the digital environment, specific sequences of gestures could then activate complex commands, much like syntax in a language. Users might end up expressing a wide range of actions and instructions by combining gestures in various ways, vaguely similar to some kind of rudimentary sign language.
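
To make the "syntax" idea a little more concrete, here is a minimal sketch of how a stream of recognized gestures could be translated into commands. Everything in it is an assumption made for illustration: the gesture names, the command names, and the `GRAMMAR` table are hypothetical and not taken from any real XR toolkit. The sketch simply prefers the longest gesture sequence that matches, so a combination can mean something different from its parts.

```python
from typing import Dict, Iterable, List, Tuple

# Hypothetical gesture sequences and the commands they trigger.
# These names are invented for illustration only.
GRAMMAR: Dict[Tuple[str, ...], str] = {
    ("grab",): "select",
    ("throw",): "discard",
    ("grab", "throw"): "delete_selected",
    ("grab", "pull", "release"): "open_details",
}

# Longest sequence in the grammar, used to bound the lookahead.
MAX_LEN = max(len(sequence) for sequence in GRAMMAR)


def parse_gestures(stream: Iterable[str]) -> List[str]:
    """Turn a gesture stream into commands, preferring the longest match."""
    gestures = list(stream)
    commands: List[str] = []
    i = 0
    while i < len(gestures):
        # Try the longest possible sequence first, then shorter ones.
        for length in range(min(MAX_LEN, len(gestures) - i), 0, -1):
            candidate = tuple(gestures[i:i + length])
            if candidate in GRAMMAR:
                commands.append(GRAMMAR[candidate])
                i += length
                break
        else:
            # Unrecognized gesture: ignore it and move on.
            i += 1
    return commands


print(parse_gestures(["grab", "throw", "wave", "grab", "pull", "release", "throw"]))
# prints ['delete_selected', 'open_details', 'discard']
```

A real system would also have to deal with timing, recognition confidence, and accidental movements, none of which this sketch attempts.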

Actions and behaviors emerging from human-computer interactions

Relying on specific gestures and enabling complex syntax means a certain degree of formalization is a prerequisite, as it helps establish consistency, which in turn makes the interface more predictable and learnable. If a specific gesture always leads to the same outcome, users can build a mental model of how the interface works much more readily.

This would be an example of a grammar of action: the actions and behaviors (gestures, facial expressions, and other non-verbal communication methods) that emerge from human interactions with novel technologies. The potential lies in how these gestures can facilitate seamless human-machine interactions. Imagine a future grammar of action where a specific gesture replaces a whole verbal instruction like “give me more info on this” or “put this into my files”, streamlining the interaction.

On the other hand, too much formalization can get in the way of intuitiveness; if users are required to remember and perform complex sequences of gestures to achieve basic tasks, the interface becomes cumbersome and frustrating to use. So, there should definitely be a degree of natural mapping between actions and outcomes in the virtual environment. For instance, the grabbing motion mentioned before is a natural way to select an object, and a throwing motion is a natural way to discard it—no need to replace these with more abstract gestures. This leads to an interface that feels more intuitive, even if there are formal rules governing the interactions.

One thing to note, though, is that different generations can have different ideas about gestures in natural user interfaces, because they have lived with different technologies throughout their lives. For instance, in one experiment older participants mimed turning up the volume by twisting an invisible knob, while those under 30 either lifted a palm or used a pinching gesture. This non-universality of gestures is something to keep in mind when designing natural user interfaces for specific target audiences or the general public.
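
One way an interface might accommodate this is to let several gestures act as aliases for the same action. The sketch below is only an illustration under that assumption; the gesture and action names are hypothetical labels loosely based on the volume example, not identifiers from any real gesture recognizer.

```python
from typing import Dict, Optional, Set

# Hypothetical mapping from an action to every gesture that should trigger it.
# The names are invented for illustration.
ACTION_GESTURES: Dict[str, Set[str]] = {
    "volume_up": {"twist_knob", "lift_palm", "pinch"},
    "select": {"grab"},
    "discard": {"throw"},
}


def build_lookup(action_gestures: Dict[str, Set[str]]) -> Dict[str, str]:
    """Invert the table so each gesture points at exactly one action."""
    lookup: Dict[str, str] = {}
    for action, gestures in action_gestures.items():
        for gesture in gestures:
            if gesture in lookup:
                # One gesture with two meanings would make the UI unpredictable.
                raise ValueError(f"gesture {gesture!r} is mapped to two actions")
            lookup[gesture] = action
    return lookup


LOOKUP = build_lookup(ACTION_GESTURES)


def resolve(gesture: str) -> Optional[str]:
    """Return the action for a gesture, or None if it is not recognized."""
    return LOOKUP.get(gesture)


print(resolve("twist_knob"), resolve("lift_palm"))
# prints volume_up volume_up
```

Keeping the table keyed by action makes it easy to see at a glance which gestures each audience would need, while the ambiguity check preserves the "same gesture, same outcome" consistency discussed earlier.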

Special XR rooms for a holodeck-esque experience

Using gestures and movement to interact with an XR user interface naturally means that there should be enough clear space around the user.

The Apple Vision Pro seems to have been developed primarily with at-home usage in mind, and people are probably going to use it mainly while lounging on the sofa—so, not exactly gesturing wildly or pacing much around the room.

But why not go a step further?

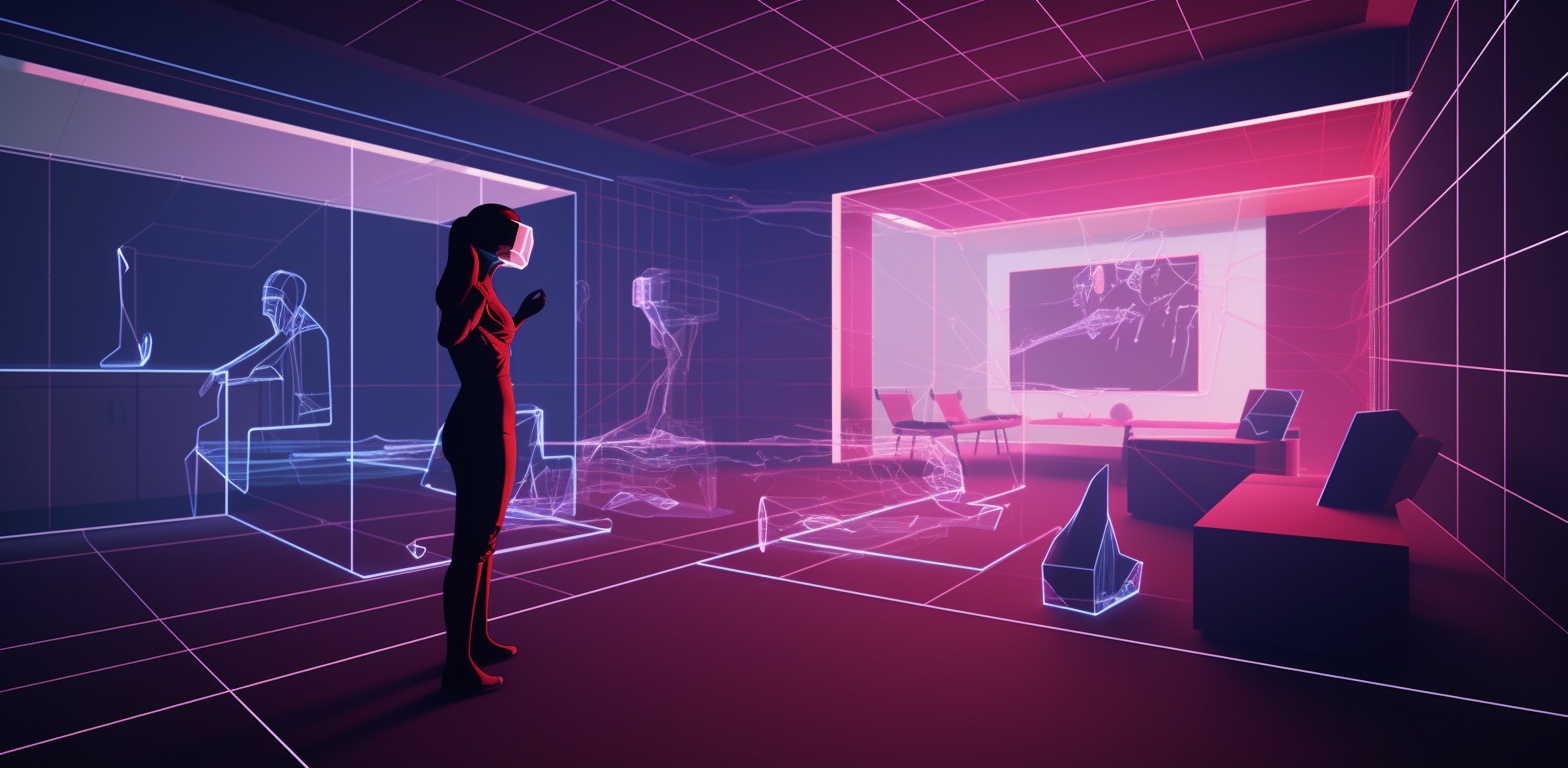

I could easily imagine that the future might also involve dedicated XR rooms in people’s homes or public spaces. These rooms would be designed to optimize the XR experience, at first for headset usage, but after a while perhaps with integrated tech which would facilitate motion tracking, haptic feedback, and advanced display technologies.

This would then be a bit more similar to the holodeck-like experience as mentioned before. People could step into their XR room and enter virtual worlds where they could interact with objects, environments, and other people (albeit still barring complete physical realism in interactions), whether it’s exploring exotic locations, participating in historical or fictional events, or, yeah, browsing clothes in a shop. Limitless possibilities!

A better way to shop or work online

Mundane as the example may be, online shopping would be completely transformed. Instead of browsing through slews of pictures on webpages, users would enter virtual malls and shops and walk through aisles, pick up items, examine them closely, or even try them on virtually before making a purchase. Of course, there would also be virtual shop assistants or chatbots offering personalized recommendations and assistance, just like in a physical store.

It would be really interesting if there were some unobtrusive way of incorporating haptic feedback that let shoppers feel the texture, weight, and other physical attributes of products virtually, the one thing sorely missing from our current tech. A person holding a virtual smartphone they intend to buy could then feel its shape and weight as if they were holding the real device.

The same concept of the XR room would be beneficial to various other environments, like educational and news platforms, as well as entertainment venues. Journalists and content creators could make use of this technology to transport their audiences to the heart of events for an immersive experience. (Would be nice if they skipped the ads, though.)

Virtual offices would make for a good use of the technology, too, especially if the XR room’s interface could be customized according to individual preferences. The system might scan the physical environment to map real-world objects into the virtual world to make them usable in the XR space, and thus create a seamless experience.

Conceptualizing spatial information architecture

Utilizing your physical, 3D workspace within an extended reality would likely have certain palpable benefits when it comes to cognition as well.

Traditional UIs are typically confined to the limited 2D screen space of a computer, smartphone, or tablet. Since VR and AR allow for more creative and spacious user interfaces, there is a reduced need for traditional menus and buttons. This freedom allows for more creativity and experimentation in the design process. Instead of relying solely on flat menus and buttons, information can now be organized and compartmentalized within a three-dimensional environment.

So, when it comes to AR information architecture, digital material is incorporated into the user’s physical surroundings. Ideally, the information architecture of an augmented reality experience gives users contextually relevant information that aligns with their actual environment.

In contrast to 2D interfaces, the spatial organization of information in 3D space takes much more advantage of human spatial memory. The human brain is adept at remembering spatial relationships. By taking advantage of spatial memory, we can associate data with specific locations or objects within the 3D environment. You could have virtual shelves or stations where you could keep relevant files or tools, just like organizing physical objects spatially in the real world.

Navigating through such a virtual workspace with spatially organized data would certainly feel more intuitive and organic than folder-based structures on a 2D screen. The ability to interact with data in a physical, tangible way can make it feel more concrete and easier to understand.

The benefits of spatial perception and memory

This idea of spatial UIs drawing on human spatial memory brings a parallel to mind, one that seems to support the view that this kind of information architecture benefits human cognition.

The same spatial memory mechanism is what makes the Roman Room and Memory Palace mnemonic techniques effective. The idea is to mentally walk through a familiar physical location (a room, or a whole house) and associate specific pieces of information with different objects within that space. To recall the information, you mentally retrace your steps through the location and revisit each object.

In an XR environment, users could essentially create their own virtual memory palaces; a virtual workspace with an immersive information storage system. Place your important documents on a virtual desk, your research materials on a virtual bookshelf, your project management tools on a virtual notice board. Each item’s location serves as a contextual cue, triggering memory recall and making it easier to find and organize information intuitively.
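
As a rough illustration of what such a location-based storage system could look like as a data structure, here is a small sketch. It assumes a room coordinate system measured in metres and uses hypothetical names throughout (`Anchor`, `SpatialWorkspace`, the example items and positions); it is not modeled on any existing XR framework.

```python
import math
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

Position = Tuple[float, float, float]  # assumed: metres in room coordinates


@dataclass
class Anchor:
    """A place in the room (desk, shelf, board) that can hold items."""
    name: str
    position: Position
    items: List[str] = field(default_factory=list)


class SpatialWorkspace:
    """Hypothetical store that files items by location instead of by folder."""

    def __init__(self) -> None:
        self.anchors: Dict[str, Anchor] = {}

    def add_anchor(self, name: str, position: Position) -> None:
        self.anchors[name] = Anchor(name, position)

    def place(self, item: str, anchor_name: str) -> None:
        self.anchors[anchor_name].items.append(item)

    def nearest(self, position: Position) -> Anchor:
        """Return the anchor closest to where the user is standing or reaching."""
        return min(self.anchors.values(),
                   key=lambda anchor: math.dist(anchor.position, position))

    def locate(self, item: str) -> Optional[str]:
        """Return the name of the anchor holding an item, if any."""
        for anchor in self.anchors.values():
            if item in anchor.items:
                return anchor.name
        return None


workspace = SpatialWorkspace()
workspace.add_anchor("desk", (0.0, 0.0, 0.75))
workspace.add_anchor("bookshelf", (2.0, 0.0, 1.5))
workspace.add_anchor("notice board", (0.0, 3.0, 1.6))
workspace.place("contract.pdf", "desk")
workspace.place("research notes", "bookshelf")
workspace.place("sprint plan", "notice board")

print(workspace.nearest((1.8, 0.2, 1.4)).items)  # prints ['research notes']
print(workspace.locate("sprint plan"))           # prints notice board
```

The point of the sketch is that retrieval can start from a place ("what is near my hand?") as well as from a name, which is the behavior the memory palace analogy relies on.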

The validity of virtual experiences

Even without considering these cognitive benefits and impacts on productivity, having something akin to a holodeck seems like at least a great way to have fun. But, as with some other technologies we have now, its pervasive presence might not be completely innocuous in practice, at least psychologically.

Now, with the integration of real-world objects and environments into virtual spaces, the boundaries between the two are becoming increasingly ambiguous. It’s interesting to consider how much of our identities and experiences are shaped by our interactions in virtual environments. What’s the validity of experiences within these mixed reality spaces, and do they hold equal weight to purely physical experiences?

When we engage with an immersive experience that merges the real and virtual aspects into one, aligning our senses spatially, the two worlds connect in our mind. This seemingly organic and authentic integration of the real and the virtual evokes a digital future that will not be limited to one or the other; instead it will encompass both, creating a single, unified reality.

So, there is no denying that virtual spaces have been becoming more substantial in meaning and gravity for quite a large segment of the world’s population for some time now, and this trend is only going to accelerate with XR. It’s hard to avoid the implication that people will probably have certain difficulties differentiating between the two, most likely leading to some unprecedented social impacts.

There’s another thing, too. Escapism could have a strong hold over some people and impact their psychological wellbeing. As these technologies become increasingly immersive, people might prefer to spend more time in virtual worlds to escape real-life challenges and problems. This might lead to social isolation and neglecting physical health, or difficulties in forming and maintaining meaningful relationships.

Digital data as part of our daily environment

The pursuit of intuitive HCI is leading us into an era where the tangible and virtual will overlap, where gestures might become language, and where spatial environments will surely redefine how we interact with information—and how we experience reality.

We will not merely be adapting to new methods of interaction. Data will not be just seen or heard, but lived and breathed. With this integration of the virtual into our daily lives, the way we understand and interact with information will become multidimensional. Data will cease to be something detached and abstract, confined to a device’s display; it will become an integral part of our immediate environment and our lived experience.

Perhaps there’s one other thing to keep in mind, especially when it comes to wearable AR tech of the future. The constant influx of digital information encroaching on our reality and trying to get our attention might mean we’ll find ourselves overwhelmed in a deluge of unsolicited digital distractions. The advancements in these technologies are exciting for sure; the risk of information overload and over-reliance on tech, well, maybe not so much.

While nothing should stop you from just removing the wearable tech and enjoying the peace and quiet, the fear of missing out is a strong beast for many, and might become even more of a challenge to shake off due to the amalgamation of the virtual and the real.

As always when it comes to technological innovations, our main challenge will be to harness the benefits without letting the tech dominate our lives.

At least we humans are known for our propensity for moderation, right?

… Oh well. Let’s just hope we don’t get flooded with even more ads.